GDPR and AI: what you need to know

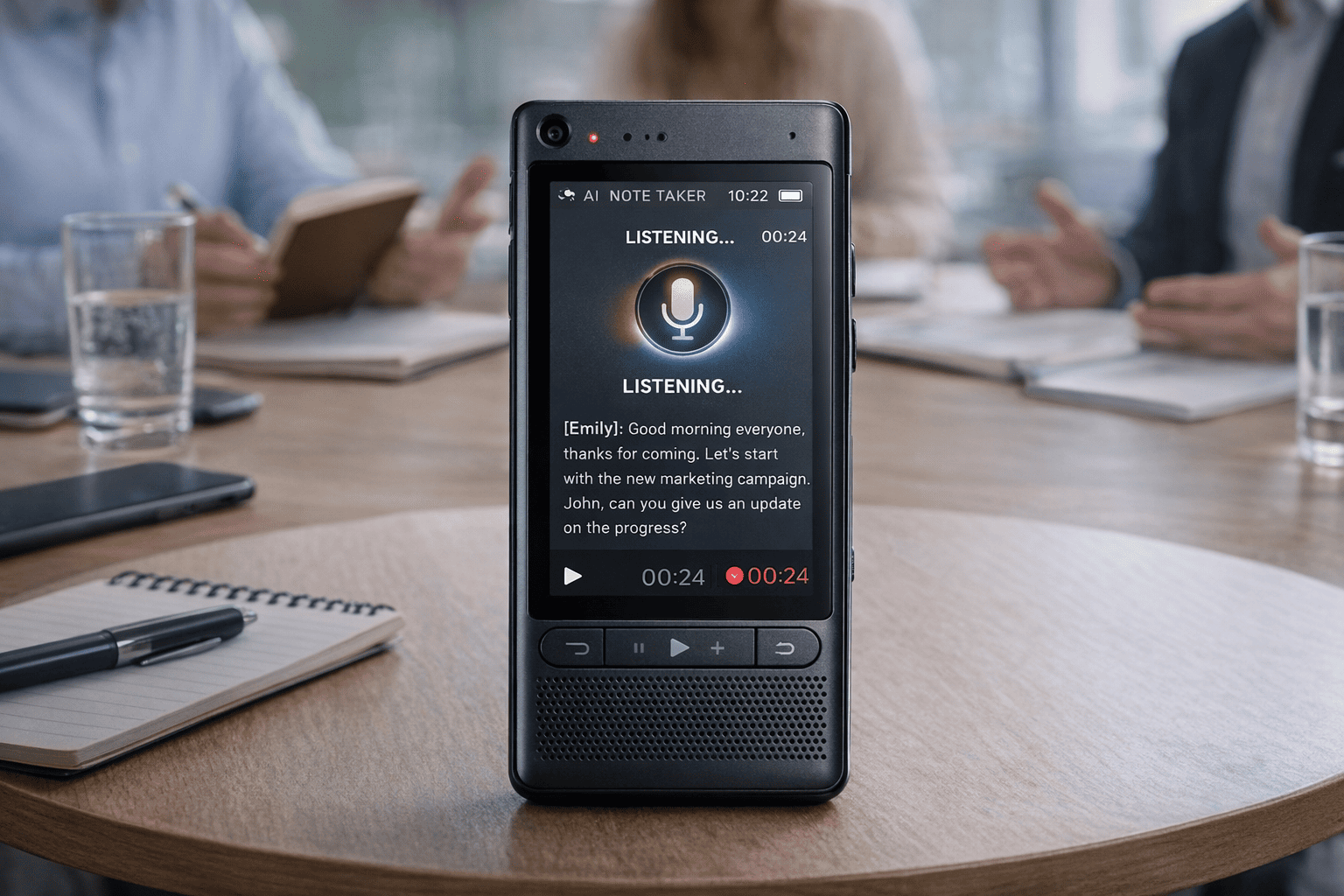

Get the work done for any meeting

Meeting transcription, AI-customized notes, CRM/ATS integration, and more

If you're exploring AI in the EU, one question is fundamental:

How do you benefit from AI without breaching the GDPR?

This article explains when the GDPR applies to AI, what the biggest compliance risks are, and how to design AI workflows that are both powerful and privacy-safe.

Does the GDPR cover AI use?

Yes. In almost every realistic situation where AI is used to process personal data, the General Data Protection Regulation (GDPR) applies.

The GDPR governs the "processing" of personal data. Processing includes collection, storage, analysis, profiling, and automated decision-making, which is exactly what many AI systems do. Even if your AI only touches a name and an email address, or logs behavioral data (IP addresses, usage patterns), that is personal data under the GDPR's broad definition.

Key GDPR provisions that matter when using AI

If you integrate AI into your workflows, the following GDPR rules matter:

- Legal basis for processing: You must have a legal basis (consent, contract, legitimate interest, or another valid ground) before processing personal data with AI.

- Transparency and duty to inform: You must tell data subjects how their data will be used, including whether it is fed into AI, what kind of profiling or automated decisions may take place, and what rights they have.

- Individual rights: People have the right to access their data and to request its correction, erasure, or restriction, including when the data has been processed by an AI.

- Special rules for profiling and automated decision-making: If AI makes decisions about a person (hiring, credit, evaluation, risk scoring, and so on), the GDPR requires specific safeguards, notably under Article 22: meaningful information about the logic involved, and the right to human review, to object, or to contest the decision.

- Data minimization and purpose limitation: Collect only the data strictly necessary for the AI's purpose, and do not reuse it later without clear notice or a new legal basis such as fresh consent.

- Security and accountability obligations: Data controllers must ensure appropriate security (encryption, access control), document their processing activities, and be ready to demonstrate compliance.

GDPR and AI: the challenges and risks

Deploying AI in line with the GDPR brings major benefits, but also specific compliance risks.

⚠️ Risk 1: Processing personal data even when you don't intend to

AI systems rarely process only anonymous data. In many cases they ingest names, email addresses, behavioral logs, IP addresses, user identifiers, and metadata, or infer new attributes (user preferences, predictions, scores). Under the GDPR, all of this counts as personal data. Applying AI algorithms to such data triggers the standard obligations of a data controller.

Even when data looks "harmless", AI's capacity for combination, inference, or re-identification can mean that a dataset suddenly contains personal data, and the GDPR applies in full.
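As a rough illustration of how combining fields creates identifiability, the sketch below computes the smallest group of records that share the same attribute values (the k in k-anonymity). The records, field names, and the `smallest_group_size` helper are hypothetical and made up for this example; it is not a complete re-identification risk assessment.

```python
from collections import Counter

def smallest_group_size(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same values for the
    given fields. A result of 1 means at least one person is singled out."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values(), default=0)

# Hypothetical records: no names, no emails.
rows = [
    {"postcode": "75011", "birth_year": 1984, "job": "nurse"},
    {"postcode": "75011", "birth_year": 1991, "job": "teacher"},
    {"postcode": "69003", "birth_year": 1984, "job": "teacher"},
    {"postcode": "69003", "birth_year": 1991, "job": "nurse"},
]

k_single = smallest_group_size(rows, ["postcode"])
k_combined = smallest_group_size(rows, ["postcode", "birth_year", "job"])
print(k_single, k_combined)
```

In this toy dataset every single attribute is shared by two people, but the combination of all three is unique to each person, which is the kind of effect that can turn "harmless" fields into personal data.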

⚠️ Risk 2: Lack of transparency and explainability ("black box" AI versus GDPR rights)

AI is often opaque. Machine learning models, large language models, and generative systems run on complex internal logic that is hard to explain in human-readable terms.

That opacity clashes with the GDPR's transparency requirement: you must tell users how their personal data is processed, how profiling or automated decisions work, and what logic is involved. When the AI's output is a recommendation, decision, or profiling result that affects people, a lack of explainability can breach the GDPR's fairness and transparency rules.

⚠️ Risk 3: Consent and a valid legal basis get complicated

The GDPR requires a legal basis for processing personal data. In many AI contexts, obtaining valid consent is hard: it must be informed, specific, freely given, and revocable. And if you later retrain models, reuse the data, or feed it into new AI pipelines, is the original consent still valid?

Alternatively, you can rely on legitimate interest, but that demands a strict balancing test: your interests against individuals' rights. For profiling, scoring, or predictive AI, demonstrating that legal and ethical balance becomes complex.

⚠️ Risk 4: Data minimization and purpose limitation versus AI's appetite for data

The GDPR enshrines two fundamental safeguards:

- Data minimization: collect only the data you actually need for your purpose.

- Purpose limitation: do not reuse data for new, unrelated purposes without fresh consent or another legal basis.

AI, however, thrives on data: more inputs improve accuracy, modeling, inference, and future adaptability. The tension is built in. If you feed an AI large datasets "just in case", you risk over-collecting. If you later want to reuse the data for new AI experiments, you risk breaching purpose limitation.

⚠️ Risk 5: Uncontrolled retention, storage, and replication of data

AI training, logging, model building, and backups can all lead to personal data being stored or replicated indefinitely. Unless data is strictly anonymized or pseudonymized, it may sit in archives longer than justified or spread across environments (cloud backups, developer laptops, logs).

This violates the GDPR's storage limitation principle and increases exposure, especially in the event of a breach or unauthorized access.
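One way to keep retention under control is to make retention periods explicit and check stored records against them on a schedule. The sketch below is a minimal illustration under assumed names: the categories, the periods in `RETENTION`, and the record layout are hypothetical, and real periods have to come from your own retention policy.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category.
RETENTION = {
    "meeting_transcript": timedelta(days=90),
    "debug_log": timedelta(days=30),
}

def expired(records, now):
    """Return records whose retention period has elapsed at time `now`.
    An unknown category raises KeyError, so nothing is kept by accident."""
    return [r for r in records if now - r["created_at"] > RETENTION[r["category"]]]

NOW = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "category": "meeting_transcript", "created_at": NOW - timedelta(days=100)},
    {"id": 2, "category": "meeting_transcript", "created_at": NOW - timedelta(days=10)},
    {"id": 3, "category": "debug_log", "created_at": NOW - timedelta(days=45)},
]

to_delete = expired(records, NOW)
print([r["id"] for r in to_delete])
```

A real job would also need to reach copies in backups, logs, and development environments, which this sketch does not attempt.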

⚠️ Risk 6: Automated decisions, profiling, and individual rights

When AI produces assessments, scores, predictions, or decisions about people (hiring suitability, credit risk, personalized offers, user profiling), the GDPR adds further obligations. Individuals must have:

- Transparent information about the decision logic

- The right to obtain human review or contest the decision

- The right to object or to request human intervention

If you don't honor these rights (for example by hiding the AI's logic or offering no review process), you take on legal risk and undermine user trust.

⚠️ Risk 7: Working with subprocessors and third-party AI providers

Many teams rely on external AI vendors, cloud-based AI APIs, or SaaS tools. But when you outsource AI processing, the GDPR does not go away: you remain the data controller, and your vendor becomes a data processor.

That requires:

- A proper data processing agreement (DPA) that specifies data flows, storage location, retention, breach notification, and data export restrictions.

- Guarantees that data stays in compliant jurisdictions (for example, the EU) and that subprocessors follow the same standards.

- Auditability, logs, and control over what is done with the data.

How to make your AI system GDPR-compliant

With a thoughtful approach, you can build or adopt AI systems that respect privacy, protect user data, and meet GDPR requirements.

🔍 Step 1: Map your data flows: know what data you use and why

Before launching an AI project, start by documenting everything: what data you collect, where it comes from, how it flows through your systems, how it is processed, and where it is stored. That includes raw inputs, metadata, logs, model outputs, backups, and any data sharing with third parties.

This "data flow mapping" helps you identify where personal or sensitive data enters or leaves your system. It lets you assess risks early and apply protections where they are needed, rather than discovering problems later, when it may be too late.
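A data flow map does not have to start as a diagram; a structured inventory is often enough to surface the risky flows. The sketch below is one possible shape, not a prescribed format: the `DataFlow` fields, the region labels in `EU_REGIONS`, the vendor name, and the flagging rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical region labels treated as "inside the EU" for this example.
EU_REGIONS = {"eu-west", "eu-central"}

@dataclass
class DataFlow:
    name: str
    source: str
    destination: str
    data_categories: List[str]
    contains_personal_data: bool
    storage_region: str
    third_party: Optional[str] = None

def flows_needing_review(flows):
    """Names of flows that carry personal data to a third party or outside the EU."""
    return [
        f.name for f in flows
        if f.contains_personal_data
        and (f.third_party is not None or f.storage_region not in EU_REGIONS)
    ]

flows = [
    DataFlow("crm_sync", "web_form", "crm", ["name", "email"], True, "eu-west"),
    DataFlow("transcription_api", "meeting_audio", "vendor_api", ["voice", "name"],
             True, "us-east", third_party="ExampleVendor"),
    DataFlow("usage_metrics", "app", "dashboard", ["aggregate_counts"], False, "us-east"),
]

print(flows_needing_review(flows))
```

Keeping the inventory in a reviewable format like this also gives later audits something concrete to check against.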

📄 Step 2: Choose a legal basis and define a clear purpose

The GDPR requires a legal basis for processing personal data. For AI uses, the common bases are:

- Consent: when users explicitly agree to have AI process their data (for example, for profiling or personalization). Consent must be informed, unambiguous, and revocable.

- Performance of a contract or legitimate interest: for internal processes, service delivery, or necessary operations, if you can show that the use of data is proportionate and respects individuals' rights.

You should also define and document purpose limitation: be explicit about what the data will be used for, and commit to not reusing it for unrelated tasks unless you obtain renewed consent or another legal basis. This reduces the risk of misuse and helps maintain trust.
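Purpose limitation is easier to enforce when the purposes a person agreed to are stored next to their data and checked before each use. The following is a minimal sketch that only models consent as the legal basis; the record layout, the purpose names, and the `may_process` helper are hypothetical.

```python
def may_process(consent, purpose):
    """Allow processing only for a purpose the person agreed to,
    and only while the consent has not been withdrawn."""
    return (not consent["withdrawn"]) and purpose in consent["purposes"]

# Hypothetical consent record for one data subject.
consent = {
    "subject_id": "u-123",
    "purposes": {"meeting_transcription"},
    "withdrawn": False,
}

print(may_process(consent, "meeting_transcription"))  # purpose the user agreed to
print(may_process(consent, "model_training"))         # new purpose, not covered
```

Under this sketch, reusing transcripts to retrain a model would be blocked until the new purpose is covered by consent or another documented legal basis.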

🧹 Step 3: Minimize, and anonymize or pseudonymize data where possible

Apply the data minimization principle: collect and process only what is strictly necessary. Avoid over-collecting "just in case". The less personal data you handle, the lower your compliance risk.

Where possible, anonymize or pseudonymize data before feeding it into AI. Truly anonymized data, where individuals can no longer be identified, falls outside the GDPR's scope. Pseudonymized data is still personal data, but pseudonymization reduces risk and, combined with appropriate security, helps you stay safer while still benefiting from AI processing.
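Minimization and pseudonymization can be combined in a small preprocessing step that runs before any record reaches an AI system. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library as one possible pseudonymization technique; the field allowlist, the key, and the helper names are assumptions for illustration. Anyone holding the key can re-link the pseudonyms, so the output remains personal data.

```python
import hashlib
import hmac

# Hypothetical allowlist: only these fields are needed for the AI task.
ALLOWED_FIELDS = {"user_id", "country", "plan"}

# Placeholder key; in practice it would come from a secret store.
SECRET_KEY = b"replace-with-a-key-from-your-secret-store"

def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (stable for the same key)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_record(record: dict, key: bytes) -> dict:
    """Drop fields outside the allowlist, then pseudonymize the user ID."""
    minimal = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimal["user_id"] = pseudonymize(str(minimal["user_id"]), key)
    return minimal

raw = {"user_id": "42", "email": "ana@example.com", "country": "FR", "plan": "pro"}
print(prepare_record(raw, SECRET_KEY))
```

The email address never leaves the preprocessing step, and the same user maps to the same pseudonym across records, so aggregate analysis still works.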

📢 Step 4: Transparency: tell people about AI processing

When AI processes personal data (especially for profiling, automated decisions, or personalization), the GDPR requires you to inform data subjects. That means telling them:

- What data is collected

- Why and how the AI processes it

- What decisions or profiling may take place

- Their rights (access, rectification, erasure, objection)

Use clear, accessible privacy notices. Avoid jargon. If the outputs affect people (for example scoring, ranking, or recommendations), explain them in understandable terms. Transparency builds trust and helps you meet legal requirements.

🛡 Step 5: Provide rights and human oversight, especially for automated decisions

If your AI system makes decisions or predictions that affect people (for example credit scoring, hiring eligibility, personalization, risk assessments), the GDPR gives them rights:

- The right to access their data and the reasoning behind the decision

- The right to request review or reversal by a human

- The right to object to, or opt out of, profiling or automated decision-making

Plan a review or appeal process: whenever AI influences a decision, make sure a human in the loop can review it and intervene. Document decisions, make the records available, and allow corrections if the data or the results are wrong.
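A review process can be built into the decision flow itself. In the sketch below, the model is allowed to approve on its own, but an unfavorable outcome is only ever queued for a person and never finalized automatically. This is one possible policy, not a statement of what the GDPR requires in every case; the `Decision` fields, the threshold, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Decision:
    subject_id: str
    score: float
    status: str  # "approved", "rejected", or "pending_human_review"
    reviewer: Optional[str] = None
    note: Optional[str] = None

def propose(subject_id: str, score: float, threshold: float = 0.5) -> Decision:
    """Approve above the threshold; otherwise queue for human review."""
    status = "approved" if score >= threshold else "pending_human_review"
    return Decision(subject_id, score, status)

def review(decision: Decision, reviewer: str, approve: bool, note: str) -> Decision:
    """Record the human reviewer's final call and the reasoning behind it."""
    if decision.status != "pending_human_review":
        raise ValueError("only pending decisions can be reviewed")
    decision.status = "approved" if approve else "rejected"
    decision.reviewer = reviewer
    decision.note = note
    return decision

d = propose("candidate-7", score=0.31)
review(d, reviewer="hr-lead", approve=True, note="Model missed relevant experience.")
print(d.status, d.reviewer)
```

Storing the reviewer and the note with the decision also produces the documentation trail that an access request or an appeal would rely on.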

🔒 Step 6: Use compliant infrastructure and secure data handling

Where your data is hosted, how it is stored and encrypted, and who has access to it all matter. For EU-based organizations, choose tools and platforms that store data in EU data centers, support GDPR compliance, and commit to data protection standards.

If you use third-party cloud or AI services, sign a data processing agreement (DPA), make sure subprocessors comply, and verify that data does not leave EU jurisdictions without appropriate safeguards.

For sensitive data, consider self-hosted or on-premises AI, including local deployment of open-source models: this gives you maximum control over data residency and handling.

✅ Step 7: Document everything and audit regularly

Compliance is not a one-time task; it is ongoing. Keep records of data processing, decisions made by AI, consent logs, data flow diagrams, and any data access or sharing events.

Regular audits help catch problems early: outdated consent, data leaks, unexpected data flows, unapproved subprocessors. Being proactive saves you trouble later if regulators ask for proof of compliance.
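Parts of a periodic audit can be automated as simple checks over your records. The sketch below flags two of the problems listed above, outdated consent and unapproved subprocessors. The record layouts, vendor names, and the one-year consent age are assumptions: the GDPR does not fix an expiry period for consent, so the limit here stands in for an internal policy.

```python
from datetime import date, timedelta

def audit(consents, subprocessors_in_use, approved_subprocessors, today,
          max_consent_age=timedelta(days=365)):
    """Return a list of human-readable findings for follow-up."""
    findings = []
    for c in consents:
        if today - c["given_on"] > max_consent_age:
            findings.append(f"stale consent: {c['subject_id']}")
    unapproved = set(subprocessors_in_use) - set(approved_subprocessors)
    for name in sorted(unapproved):
        findings.append(f"unapproved subprocessor: {name}")
    return findings

# Hypothetical audit inputs.
consents = [
    {"subject_id": "u-1", "given_on": date(2023, 1, 15)},
    {"subject_id": "u-2", "given_on": date(2025, 3, 1)},
]
findings = audit(
    consents,
    subprocessors_in_use=["VendorA", "VendorB"],
    approved_subprocessors=["VendorA"],
    today=date(2025, 6, 1),
)
for line in findings:
    print(line)
```

Running a check like this on a schedule and keeping its output is also a lightweight way to show regulators that audits actually take place.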

The best GDPR-compliant AI tools and platforms (or those that respect EU sovereignty and privacy)

Not all AI tools are equal when it comes to GDPR compliance, data sovereignty, and privacy.

🔹 Noota: EU-founded, privacy-respecting meeting transcription and documentation tool

Why it stands out

Noota, an AI assistant founded in Europe for meeting transcription and note-taking, designs its product with privacy and compliance in mind. For teams that need to record, transcribe, or archive meetings (which may include personal data), Noota offers an alternative that respects the GDPR. It avoids reliance on opaque transcription services hosted abroad.

When it's a good fit

Hybrid or remote teams working across Europe, HR departments running interviews, client meetings, internal recaps: anywhere confidential information is discussed. Using Noota helps keep data storage, transcripts, and archives under compliant governance.


🔹 Mistral AI: open-weight LLMs with sovereign options

Why it stands out

Mistral AI is a European (French) LLM provider that emphasizes openness, transparency, and, most importantly, the ability to self-host or deploy within infrastructure you control. Its models are not tied to opaque, proprietary APIs hosted in unknown jurisdictions. That lets you control where data is stored and how it is processed.

When it's a good fit

If you build internal tools, process confidential or regulated data, or want to avoid exporting data beyond your control, Mistral helps you stay compliant. You can run prompts through its models, operate them locally or in your own EU-hosted cloud, and manage the data flow in line with the GDPR.

🔹 DeepL: translation and language AI with strong European roots and a compliance track record

Why it stands out

DeepL is headquartered in Germany and is widely regarded as one of the best translation and language AI tools. For companies that need multilingual communication, document translation, or international collaboration in Europe, DeepL offers excellent quality and compliance credentials.

When it's a good fit

If you work in several languages (marketing, documentation, customer support, international teams) and need a translation tool that respects the GDPR, data residency, and privacy by design.
