GDPR & AI: What You Need to Know

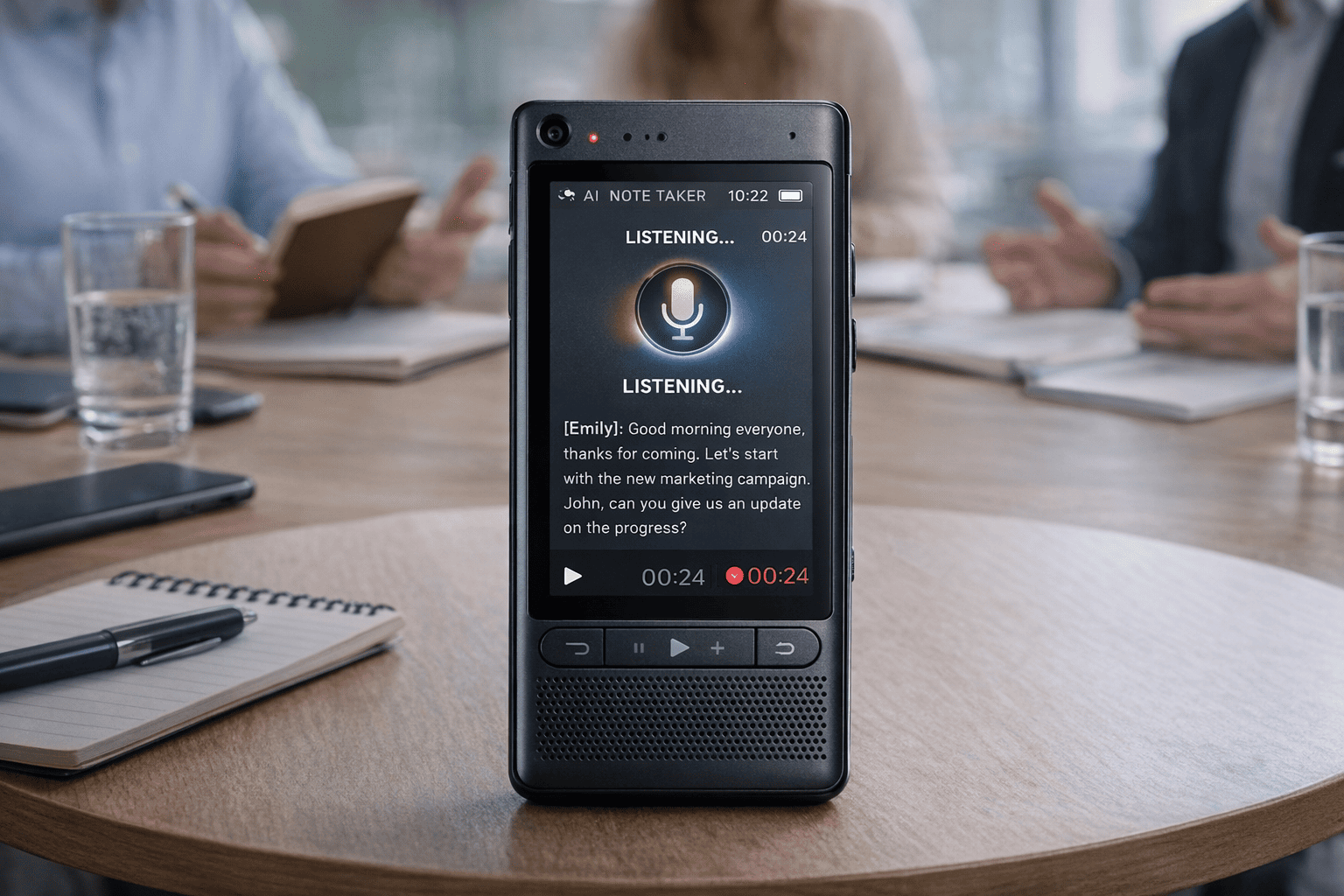

Get the work done for any meeting

Meeting transcription, AI custom notes, CRM/ATS integration, and more

If you’re exploring AI in the EU, there's one critical question:

How do you benefit from AI without breaking GDPR rules?

This article helps you understand when GDPR applies to AI, what the biggest compliance risks are, and how to design your AI workflows so they’re both powerful and privacy-safe.

Does GDPR cover AI use?

Yes — in almost all realistic situations where you use AI to process personal data, the General Data Protection Regulation (GDPR) applies.

GDPR governs “processing” of personal data. “Processing” includes collection, storage, analysis, profiling, automated decision-making — and yes, that’s exactly what many AI systems do. Even if your AI only touches a name and email, or logs behavioral data (IP, usage patterns), that’s personal data under GDPR’s broad definition.

Key GDPR provisions relevant when using AI

If you integrate AI into your workflows, the following GDPR rules matter:

- Lawful basis for processing: You must have a lawful basis — consent, contract, legitimate interest, or another valid ground — before processing personal data with AI.

- Transparency & information duty: You need to inform data subjects about how their data will be used, including whether it feeds into AI, what kind of profiling or automated decisions may happen, and what rights they have.

- Rights of individuals: People have the right to access data, request correction, deletion, or restriction — also when data has been processed by an AI.

- Special rules for profiling/automated decision-making: If AI makes decisions about a person (hiring, credit, evaluation, risk scoring, etc.), GDPR requires specific safeguards: meaningful information about the logic involved, the right to human review, the right to object, and the right to request an explanation.

- Data minimization & purpose limitation: Collect only data strictly required for the AI’s purpose, and don’t repurpose data later without clear notice or new consent.

- Security & accountability obligations: Data controllers must ensure adequate security (encryption, access control), document processing activities, and be ready to demonstrate compliance.

GDPR & AI — What challenges and risks arise

AI brings strong benefits, but using it under GDPR also carries specific compliance risks.

⚠️ Risk 1: Processing personal data — even when you don’t intend to

AI systems rarely process only anonymous data. In many cases, they ingest names, email addresses, behavioral logs, IP addresses, user IDs, and metadata, or infer new attributes (user preferences, predictions, scores). Under GDPR, all this counts as “personal data.” Applying AI algorithms to such data triggers the standard obligations for data controllers.

Even when the data seems “harmless,” AI’s capacity for combination, inference, or re-identification can mean the dataset suddenly carries personal data — and GDPR applies fully.

⚠️ Risk 2: Lack of transparency and explainability (“black box” AI vs. GDPR rights)

AI is often opaque. Machine-learning models, large-language models, generative systems — they work with complex internal logic that’s hard to explain in human-readable form.

That opacity collides with GDPR’s requirement for transparency: you must inform users about how their personal data is processed, how profiling or automated decision-making happens, and on what logic. When your AI output is a recommendation, decision, or profiling result affecting individuals, lack of explainability can violate GDPR’s fairness and transparency rules.

⚠️ Risk 3: Consent & valid legal basis become tricky

GDPR requires a lawful basis when processing personal data. In many AI contexts, getting valid consent is hard: you need to ensure it’s informed, specific, freely given, and revocable. But if you later retrain models, reuse data, or feed it into new AI pipelines — is the original consent still valid?

Alternatively, you may claim legitimate interest — but that demands a strict balancing test: your interests vs. individual rights. For profiling, scoring, or predictive AI, proving that balance legally and ethically becomes complex.

⚠️ Risk 4: Data minimization and purpose limitation vs. AI’s appetite for data

GDPR enshrines two critical safeguards:

- Data minimization — collect only data truly necessary for your purpose.

- Purpose limitation — don’t repurpose data for new, unrelated uses without new consent or legal basis.

But AI thrives on data: more inputs improve accuracy, modeling, inference, and future adaptability. There’s a natural tension — if you feed an AI with large datasets “just in case,” you risk over-collecting. If later you want to repurpose data for new AI experiments, you risk violating purpose limitation.

⚠️ Risk 5: Retention, storage, and uncontrolled data replication

AI training, logging, model-building, backups — all these can lead to indefinite storage or replication of personal data. Unless you anonymize / pseudonymize data strictly, there’s a risk that data stays in archives longer than justified, or spreads across environments (cloud backups, developer laptops, logs).

That violates GDPR’s storage-limitation principle, and increases exposure — especially in case of breach or unauthorized access.
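One way to keep retention under control is to attach a retention period to each category of stored data and purge on a schedule. The following is a minimal sketch under stated assumptions: the category names, the retention periods, and the `StoredRecord` type are all hypothetical, and the right periods depend on your own purposes and legal advice.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category (assumed values).
RETENTION = {
    "transcript": timedelta(days=90),
    "training_sample": timedelta(days=365),
    "debug_log": timedelta(days=30),
}

@dataclass
class StoredRecord:
    record_id: str
    category: str
    created_at: datetime

def expired(records, now):
    """Return the records whose retention period has elapsed."""
    return [r for r in records if now - r.created_at > RETENTION[r.category]]

now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    StoredRecord("a", "debug_log", now - timedelta(days=45)),
    StoredRecord("b", "transcript", now - timedelta(days=10)),
]
to_delete = expired(records, now)
print([r.record_id for r in to_delete])
```

In practice the same check would need to cover every copy of the data, including backups and developer environments, which is where a data-flow inventory helps.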

⚠️ Risk 6: Automated decisions / profiling and individual rights

When AI outputs assessments, scores, predictions, or decisions about individuals (hiring suitability, credit risk, personalized offers, user profiling), GDPR introduces extra obligations. Individuals must have:

- Transparent information about the decision logic

- The right to obtain human review or challenge the decision

- The right to opt out or request intervention

Failing to provide these rights — for example, by hiding AI logic or offering no review process — puts you at legal risk and undermines user trust.

⚠️ Risk 7: Working with third-party AI service providers & subprocessors

Many teams rely on external AI vendors, cloud-based AI APIs, or SaaS tools. But when you outsource AI processing, GDPR doesn’t disappear: you remain the data controller, and your vendor typically acts as a data processor.

That requires:

- A proper data processing agreement (DPA), specifying data flows, storage location, retention, breach notification, and data export restrictions.

- Guarantees that data stays in compliant jurisdictions (e.g. EU), and that subprocessors follow the same standards.

- Auditability, logs, and control over what’s done with data.

How to make your AI system comply with GDPR

With a thoughtful approach, you can build or adopt AI systems that respect privacy, protect user data, and meet GDPR requirements.

🔍 Step 1: Map your data flows — know what data you use and why

Before launching an AI project, start by documenting everything: what data you collect, where it comes from, how it flows through your systems, how it’s processed, and where it's stored. This includes raw inputs, metadata, logs, model outputs, backups, and any data sharing with third parties.

This “data-flow mapping” helps you identify where personal or sensitive data enters or leaves your system. It lets you assess risks early and apply protections where needed — instead of discovering problems later when it may be too late.
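A data-flow map can start as a simple structured inventory rather than a diagram. The sketch below assumes a hypothetical `DataFlow` record and made-up example flows; the fields are illustrative and should be adapted to whatever your own systems actually do.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DataFlow:
    """One hop of data through the system (all fields are illustrative)."""
    name: str
    source: str
    destination: str
    data_categories: tuple
    contains_personal_data: bool
    leaves_eu: bool

def flows_needing_review(flows):
    """Return flows where personal data is sent outside the EU."""
    return [f for f in flows if f.contains_personal_data and f.leaves_eu]

# Hypothetical inventory for a meeting-notes product.
inventory = [
    DataFlow("meeting audio upload", "web client", "transcription service",
             ("voice", "names"), True, False),
    DataFlow("prompt forwarding", "backend", "external LLM API",
             ("transcript text",), True, True),
    DataFlow("usage metrics", "backend", "analytics store",
             ("aggregate counts",), False, True),
]

for flow in flows_needing_review(inventory):
    print(f"Review safeguards for: {flow.name} -> {flow.destination}")
```

Keeping the inventory in code or in a versioned file makes it easier to notice when a new flow appears without a matching entry.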

📄 Step 2: Choose a lawful basis & define clear purpose

GDPR requires a lawful basis for processing personal data. For AI uses, common bases are:

- Consent — when users explicitly agree to their data being processed by AI (e.g. for profiling or personalization). Consent must be informed, unambiguous, and revocable.

- Performance of a contract / legitimate interest — for internal processes, service delivery, or necessary operations, if you can demonstrate that data use is proportionate and rights-safe.

You must also define and document your purposes: be explicit about what the data will be used for, and commit not to repurpose it for unrelated tasks unless you get renewed consent or another legal basis. This reduces the risk of misuse and helps maintain trust.
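Documented purposes can also be enforced in code, so that a pipeline refuses data uses nobody signed off on. This is a minimal sketch, assuming a hypothetical `PURPOSE_REGISTER` with invented purpose names, lawful bases, and field names; it illustrates the idea and is not a legal assessment.

```python
# Hypothetical register: each documented purpose with its lawful basis
# and the data fields it is allowed to use.
PURPOSE_REGISTER = {
    "meeting_summaries": {"basis": "contract", "fields": {"transcript", "email"}},
    "product_personalization": {"basis": "consent", "fields": {"usage_events"}},
}

def check_use(purpose, fields, consented_purposes=frozenset()):
    """Return a list of problems; an empty list means the use matches the register."""
    entry = PURPOSE_REGISTER.get(purpose)
    if entry is None:
        return [f"purpose '{purpose}' is not documented"]
    problems = []
    extra = sorted(set(fields) - entry["fields"])
    if extra:
        problems.append(f"fields not covered by '{purpose}': {extra}")
    if entry["basis"] == "consent" and purpose not in consented_purposes:
        problems.append(f"no consent recorded for '{purpose}'")
    return problems

# A new, undocumented use of existing data is flagged before it runs.
print(check_use("model_training", ["transcript"]))
print(check_use("meeting_summaries", ["transcript"]))
```

The `consented_purposes` argument stands in for a lookup against your real consent records for the individual concerned.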

🧹 Step 3: Minimize and anonymize / pseudonymize data when possible

Use the principle of data minimization — collect and process only what you absolutely need. Avoid over-collecting data “just in case.” The less personal data you handle, the lower the compliance risk.

When possible, anonymize or pseudonymize data before feeding it into AI. Truly anonymized data, where individuals can no longer be identified, falls outside GDPR’s scope. Pseudonymized data is still personal data, but pseudonymization reduces risk and, combined with proper security, helps you stay safer while still benefiting from AI processing.
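As an illustration, minimization and pseudonymization can be combined in a small preprocessing step that runs before any record reaches a model. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library; the `minimize` helper, the field names, and the placeholder key are assumptions made for this example. Anyone holding the key can link tokens back to people, so the key has to be stored separately and protected.

```python
import hashlib
import hmac

def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a stable keyed token (HMAC-SHA256)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()

def minimize(record: dict, needed_fields, id_field: str, key: bytes) -> dict:
    """Keep only the fields the AI task needs, plus a pseudonymous subject token."""
    out = {k: record[k] for k in needed_fields if k in record}
    out["subject_token"] = pseudonymize(record[id_field], key)
    return out

# Placeholder only: load the real key from a secret store, never from source code.
SECRET_KEY = b"replace-with-a-key-from-your-secret-store"

raw = {"email": "Ana@Example.com", "phone": "+33 1 23 45 67 89", "plan": "pro"}
safe = minimize(raw, needed_fields=["plan"], id_field="email", key=SECRET_KEY)
print(sorted(safe))
```

A keyed hash is used instead of a plain hash because unkeyed hashes of emails or phone numbers can often be reversed by guessing likely inputs.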

📢 Step 4: Transparency — inform individuals about AI processing

Where personal data is processed by AI (especially profiling, automated decisions, or personalization), GDPR requires you to inform data subjects. This means providing:

- What data is collected

- Why and how the AI processes it

- What decisions or profiling may happen

- Their rights (access, correction, deletion, objection)

Use clear, accessible privacy notices. Avoid jargon. If outputs affect individuals (e.g. scoring, ranking, recommendation), explain how in understandable terms. Transparency builds trust and helps meet legal requirements.

🛡 Step 5: Provide rights & human oversight — especially for automated decisions

If your AI system makes decisions or predictions that affect individuals (e.g. credit scoring, hiring eligibility, personalization, risk assessments), GDPR gives them rights:

- Right to access their data and the rationale behind a decision

- Right to request human review and to contest the decision

- Right to object to, or be excluded from, profiling or automated decision-making

Plan for a review or appeal process: whenever AI influences a decision, ensure a human-in-the-loop can review and intervene. Document decisions, make logs available, and allow corrections if data or output is wrong.
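A review process like this can be made explicit in the data model: the AI only suggests an outcome, and nothing counts as final until a named person has signed off. The following is a minimal sketch with hypothetical names (`Decision`, `suggest`, `human_review`) and an arbitrary score threshold; a real system would also persist these records for later audits.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Decision:
    subject_id: str
    model_score: float
    suggested_outcome: str
    final_outcome: Optional[str] = None
    reviewed_by: Optional[str] = None

def suggest(subject_id: str, score: float, threshold: float = 0.7) -> Decision:
    """Record the model's suggestion without making it final."""
    outcome = "advance" if score >= threshold else "reject"
    return Decision(subject_id, score, outcome)

def human_review(decision: Decision, reviewer: str, outcome: str) -> Decision:
    """A human confirms or overrides the suggestion; only this step finalizes it."""
    decision.final_outcome = outcome
    decision.reviewed_by = reviewer
    return decision

def is_final(decision: Decision) -> bool:
    return decision.reviewed_by is not None

d = suggest("candidate-17", score=0.4)
print(d.suggested_outcome, is_final(d))
human_review(d, reviewer="hr-lead", outcome="advance")  # reviewer overrides the model
print(d.final_outcome, is_final(d))
```

Storing both the suggestion and the final outcome also makes it possible to see how often reviewers actually disagree with the model.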

🔒 Step 6: Use compliant infrastructure & secure data handling

Where your data is hosted, how it’s stored and encrypted, and who has access all matter. For EU-based organizations, favor tools and platforms that store data in EU data centers, support GDPR compliance, and commit to data-protection standards.

If using third-party AI or cloud services, sign a data processing agreement (DPA), ensure subprocessors comply, and verify that data doesn’t leave EU jurisdictions without proper safeguards.

For sensitive data, consider self-hosted or on-premise AI, or local deployment of open-source models — this gives maximum control over data residency and handling.

✅ Step 7: Document everything & audit regularly

Compliance isn’t a one-time task — it’s ongoing. Keep logs of data processing, decisions made by AI, consent records, data-flow diagrams, and any data access or sharing events.

Regular audits help you catch issues early: outdated consent, data leaks, unexpected data flows, unapproved sub-processors. Being proactive saves you trouble later if regulators ask for proof of compliance.
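Parts of such an audit can be automated. The sketch below checks two of the issues mentioned above, outdated consent and unapproved sub-processors. The function name, the inputs, and the 365-day consent age are assumptions for illustration; GDPR does not fix a consent lifetime, so the limit is a policy choice.

```python
from datetime import date

def audit(consents, subprocessors_in_use, approved_subprocessors,
          today, max_consent_age_days=365):
    """Return a list of findings; an empty list means nothing was flagged."""
    findings = []
    for subject, given_on in consents.items():
        if (today - given_on).days > max_consent_age_days:
            findings.append(f"outdated consent: {subject}")
    unapproved = set(subprocessors_in_use) - set(approved_subprocessors)
    for name in sorted(unapproved):
        findings.append(f"unapproved sub-processor: {name}")
    return findings

# Hypothetical inputs; real ones would come from consent records and vendor lists.
findings = audit(
    consents={"user-1": date(2023, 1, 15), "user-2": date(2025, 3, 1)},
    subprocessors_in_use=["vendor-a", "vendor-x"],
    approved_subprocessors=["vendor-a"],
    today=date(2025, 6, 1),
)
for finding in findings:
    print(finding)
```

Running a check like this on a schedule and keeping the results gives you dated evidence of the audits themselves.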

The Best GDPR-Compliant (or EU-Sovereign / Privacy-Friendly) AI Tools & Platforms

Not all AI tools are equal when it comes to GDPR compliance, data-sovereignty, and privacy.

🔹 Noota — meeting transcription & documentation tool, EU-founded and privacy-aware

Why it stands out

Noota — a European-founded AI assistant for meeting transcription and note-taking — designs its offering with privacy and compliance in mind. For teams needing to record, transcribe or archive meetings (which may involve personal data), Noota provides a GDPR-conscious alternative. You avoid relying on foreign-hosted, opaque transcription services.

When it’s a good fit

Hybrid or remote teams working across Europe, HR departments handling interviews, client meetings, internal recaps — anywhere sensitive information is discussed. Using Noota helps keep data storage, transcripts, and archives under compliant governance.


🔹 Mistral AI — open-weight LLM with sovereign-minded options

Why it stands out

Mistral AI is a European (French) LLM provider that emphasizes openness, transparency, and — importantly — the ability to self-host or deploy within controlled infrastructure. Their models aren’t locked behind proprietary, opaque APIs only accessible from unknown jurisdictions. That gives you control over where data is stored and how it’s processed.

When it’s a good fit

If you build internal tools, process sensitive or regulated data, or want to avoid data export outside your control, Mistral can help you stay compliant. You can run text through their models on premises or in your own EU-hosted cloud, and manage data flows according to GDPR rules.

🔹 DeepL — translation & language-AI with strong European roots and compliance record

Why it stands out

DeepL is based in Germany and widely regarded as one of the best translation and language-AI tools. For businesses needing multilingual communication, document translation, or international collaboration in Europe — DeepL offers strong quality and compliance credentials.

When it’s a good fit

DeepL fits if you work across languages (marketing, documentation, client support, international teams) and need a translation tool that respects GDPR, data residency, and privacy by design.


FAQ

In the first case, you can directly activate recording as soon as you join a videoconference.

In the second case, you can add a bot to your videoconference, which will record everything.

Noota also enables you to translate your files into over 30 languages.
